Inside Angle

From 3M Health Information Systems

Man vs. the Machine

The game of chess made history in 1997 when the IBM computer called Deep Blue beat the world chess champion Garry Kasparov. Deep Blue was not always better than a human chess player. In 1996 the computer lost to Kasparov, but through system improvements, Deep Blue was eventually able to win. Today, individuals are able to better their chess skill set and advance their rankings through practice with computer players.

How did Deep Blue win in 1997? Experts say it was brute force: Deep Blue could process so many moves so quickly that it won purely because it could analyze moves faster than a human player. Chess has two players and a fixed, limited set of rules, which simplifies the inputs and outputs for any computer working to beat an opponent. But suppose there are many inputs and a wide variety of rules, unlike the confined set chess has. Imagine how a computer could help the player analyze the situation and suggest moves faster than the player could alone. That would give the human player an advantage over other players.

In the world of computer-assisted coding (CAC), we know there are multiple inputs: different documents, individual coder preference and style, whether the patient was inpatient or outpatient, whether the patient had complications after being admitted, and so on. Today, I will expand on one of those inputs: the individual coder.

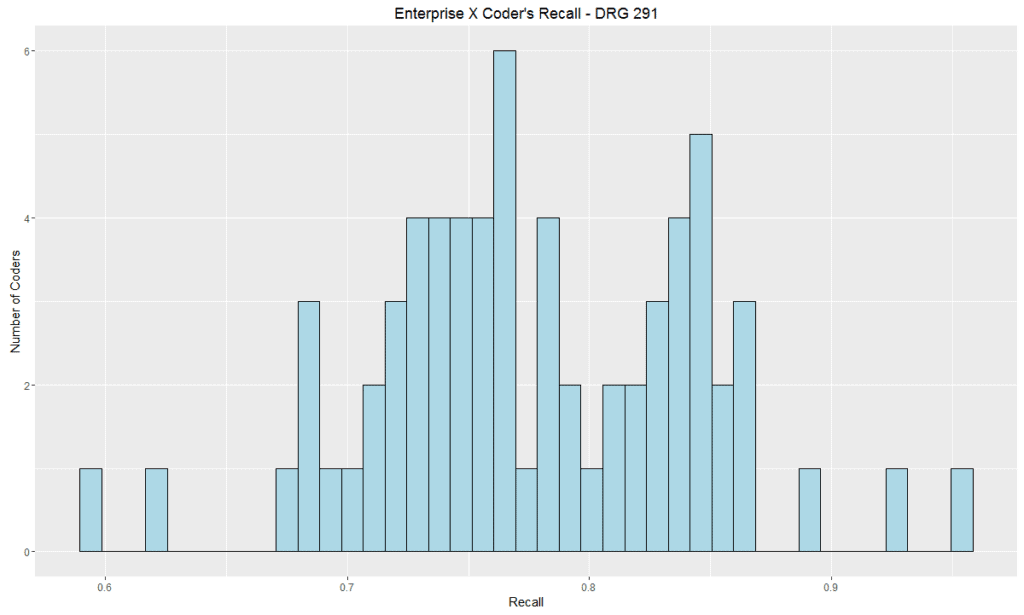

As mentioned in previous blog posts, recall is the percentage of all final billed codes that were suggested by CAC and accepted by the coder. In other words, it is the coder’s agreement rate with the engine. So, if a coder has 60 percent recall, the coder and the engine agreed 60 percent of the time. If I narrowed recall to only one specific diagnosis-related group (DRG), would the recall look similar across all coders? One could argue that different formats and documents across multiple enterprises may cause recall to vary. So, if we narrowed our analysis to only one DRG and one enterprise, what would the recall for individual coders look like? The graph below shows this scenario:

Surprisingly, the graph above shows recall varying from 60 to 90 percent for DRG 291 at Enterprise X. DRG 291 is heart failure and shock with MCC (major complication or comorbidity) and is one of the more frequently used DRGs. Looking at the graph again, most of the coders fall between about 71 percent and 87 percent recall. But why such variation?
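Before turning to possible causes, it may help to see how a per-coder recall figure like this could be computed. The sketch below is only an illustration, not how any CAC product calculates it. It assumes a code counts as "accepted" when a CAC-suggested code also appears among the final billed codes, and the record fields (`coder`, `enterprise`, `drg`, `cac_codes`, `final_codes`) and the sample visits are all made up for the example.

```python
from collections import defaultdict


def recall_by_coder(visits, drg, enterprise):
    """Return {coder: recall} for one DRG at one enterprise.

    Recall = CAC-suggested codes found in the final billed codes,
    divided by all final billed codes (assumed definition of "accepted").
    """
    accepted = defaultdict(int)
    billed = defaultdict(int)
    for visit in visits:
        # Narrow the analysis to a single DRG and a single enterprise.
        if visit["drg"] != drg or visit["enterprise"] != enterprise:
            continue
        final_codes = set(visit["final_codes"])
        suggested = set(visit["cac_codes"])
        accepted[visit["coder"]] += len(final_codes & suggested)
        billed[visit["coder"]] += len(final_codes)
    # Skip coders with no billed codes to avoid dividing by zero.
    return {c: accepted[c] / billed[c] for c in billed if billed[c]}


# Hypothetical visits; the code values are placeholders, not real codes.
visits = [
    {"coder": "coder_1", "enterprise": "X", "drg": "291",
     "cac_codes": ["a", "b", "c"], "final_codes": ["a", "b", "c", "d", "e"]},
    {"coder": "coder_2", "enterprise": "X", "drg": "291",
     "cac_codes": ["a", "b", "c", "d", "e"], "final_codes": ["a", "b", "c", "d", "e"]},
    {"coder": "coder_2", "enterprise": "X", "drg": "291",
     "cac_codes": ["a", "b", "c", "d", "f"], "final_codes": ["a", "b", "c", "d", "e"]},
    # A different DRG, which the filter leaves out.
    {"coder": "coder_1", "enterprise": "X", "drg": "000",
     "cac_codes": ["a"], "final_codes": ["a"]},
]

result = recall_by_coder(visits, drg="291", enterprise="X")
for coder, recall in sorted(result.items()):
    print(f"{coder}: {recall:.0%}")
```

With this made-up data, coder_1 agrees with the engine on 3 of 5 billed codes and coder_2 on 9 of 10, so two coders working the same DRG at the same enterprise can land at opposite ends of the range.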

The CAC engine sees evidence and suggests codes based on the evidence it sees and the rules it knows to be true. So why would coder agreement rate be so different? There could be different types of documents or less evidence for some visits compared to others even within the same enterprise (here I have labeled this enterprise “Enterprise X”). However, it is also possible the coders themselves have different preferences and habits and aren’t coding consistently across the enterprise. Inter-coder agreement has been studied and shown to have a high degree of variability. When we think about this in the context of computer-assisted coding, variability can affect whether or not an enterprise fully realizes all of the value provided by the CAC engine. We know what machines are capable of doing, but it’s also important to consider whether enterprises have standard policies and procedures that help coders be more consistent when coding and use the “machine” to their advantage.

Clarissa George is a business intelligence specialist at 3M Health Information Systems.