Inside Angle

From 3M Health Information Systems

About accuracy…

In our recent posts we’ve touched on both productivity and proper tool usage to help achieve the best possible results with Computer Assisted Coding (CAC). This week I thought I would touch on the role that accuracy of the CAC plays in all of this. While the accuracy of the engine is definitely important, we intentionally did not start our blog series here because in the real world of daily usage, some of the human factors involved in correctly using the system have just as much impact on your experience. It was important to talk about the human factors first to help put the engine accuracy into the proper perspective as part of the overall idea of “Computer Assisted Coding.” So with that being said…. let’s talk about engine accuracy.

A common question our team receives is “How accurate is the CAC engine?” When we are asked this, people are usually expecting a single number as the answer; something like “It is 92 percent accurate.” In reality, it is impossible to give a single accuracy number that will be meaningful, both because a CAC engine cannot be measured in this way and because there are many variables that affect the engine performance in different settings.

First, let’s look at why we can’t sum up the engine’s accuracy with a single number or percentage. As with our previous posts, let me start with an example outside of CAC and work up from there.

In the past few years my children have each had to do science fair projects as a required part of their junior high science class. To their good fortune or dismay (you’d have to ask them which), we’ve done projects that will easily illustrate the concepts I want to demonstrate and so I’m going to borrow from their work. My youngest daughter’s most recent project asked if a person’s ability to remember information was different when presented with information on a printed vs. an electronic screen. (In a teenage world this could be critical in persuading parents to purchase e-readers, tablets and computers!) The experiment went like this:

- Show a subject a list of 20 words and give them 30 seconds to study it. (First List)

- Show the subject a list of 40 words ~ which contains all of the words from the first list, but also includes 20 new words as “noise.” (Second List)

- Ask the subject to identify as many words as possible that they remember having seen in the first list. (Result)

She designed the experiment this way so that it wasn’t purely a memorization test and more like a subject might experience when trying to learn or remember in the real world. Measuring the results is where this gets interesting because there is not “one” number that can completely describe how well the subject did. Let’s look at an example.

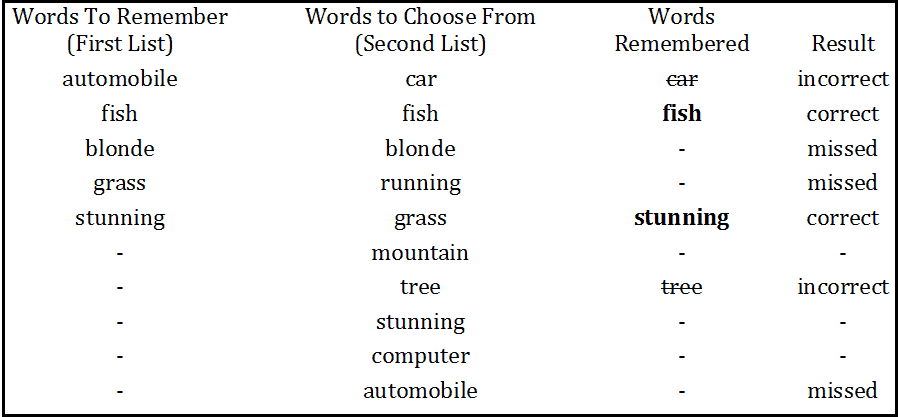

Suppose this is what my experiment looked like:

Recall = Number of items correctly identified / Number of items to be identified. (Note that this may also be referred to as sensitivity).

But that’s not really all there is to it. What about those incorrect guesses? What if I had just said that I thought all 10 of the words were on the first list? I would have 100 percent recall but I would have also made a lot of mistakes. Just saying that I correctly identified all of the terms is misleading. We also have to consider how specific I was in identifying the right terms. By this measure, I was only 50 percent precise because I made four attempts (car, fish, stunning, tree), of which only two were correct (fish, stunning). This leads us to the definition of precision which is:

Precision = Number of items correctly identified / Number of total attempts (Note that this may also be referred to as specificity)

Now let’s consider how this relates to computer assisted coding and what factors might affect your own Precision and Recall measurements. From our definitions, recall indicates what percentage of the final codes on a visit were in fact suggested by CAC. You may also see this referred to as “Coder Agreement Rate” which is really saying the same thing: “For what percentage of the codes on the visit did the coder agree with the engine?” Looking at this measurement will give you an idea of how completely the engine is identifying all of the codes. Here are some common things that could cause fluctuation in Recall.

- The first and most obvious factor is the capability of the engine itself – whether or not the engine is able to both identify and interpret the relevant clinical facts that lead to a particular code. It is important to understand though that the engine has to both IDENTIFY and INTERPRET the clinical evidence. These are two very distinct processes and often when a code isn’t properly suggested by the engine, it isn’t because the engine doesn’t know how to derive the code, it is more likely that the engine doesn’t “see” the proper evidence in the record that it needs to. The most common misses in suggesting codes come from these kinds of problems as we’ll see in the next couple of points.

- One reason that the engine may not consider all of the available evidence is that it can be stored in formats that the engine can’t completely read. Handwritten notes and scanned images are good examples of this. Because scanned or handwritten notes are in image formats, the engine is not able to read the text of the document as accurately. The images can be sent through Optical Character Recognition (OCR) software to convert them to text, but even at its best, OCR is subject to some amount of error that makes properly reading and splitting this type of document into proper sections to use for auto-coding a frequent source of “missed” auto-suggestions, no matter how obvious the evidence in these documents might be.

- Even with fully electronic documents containing the appropriate evidence, configuration issues may prevent the evidence from being considered. Set up of a robust CAC solution requires a fair amount of consideration to make sure the engine considers relevant evidence in auto-suggesting codes. Things like:

- Are the appropriate interfaces in place to send documents to the coding system?

- Once interfaces are set up, have definitions for the various types of documents been configured? (e.g. Has the system been configured to distinguish be between an Operative Report and Discharge Summary?)

- After defining document types, does the system understand, either by way of configuration or by its own ability, how to detect the various parts of the documents and treat each appropriately? (e.g. It will need to know how to treat a “Past Medical History” section from a “History of Present Illness” section). This activity, sometimes called “regioning and sectioning” plays a key role in making sure the evidence is presented to the engine in a way that can maximize the useful output it produces.

- Lastly, it is important to note that the setup and configuration of documents is not a one-time event. As new document templates and types are added or even changed within your electronic health record (EHR) and other systems, these steps of setting up interfaces, defining document types and defining document sections will need to be done for those new or changed documents. It’s a good idea to have a member of the HIM team informed as new document types are added and existing ones modified so they can assess the impact of those changes on coding and trigger this configuration process as needed.

In part two of this blog we will take a look at precision in CAC.

P.S. If you are a 360 Encompass customer and are wondering how your numbers compare, grab your 360 Encompass Performance Metrics from the support site and take a look! You can also visit us at the 2016 3M Client Experience Summit in the Collaboration Zone and we can talk through any questions you have together.

Jason Mark is a business intelligence architect with 3M Health Information Systems.