Inside Angle

From 3M Health Information Systems

AI Talk: Muppets and a boondoggle

This week’s AI Talk…

Muppet invasion in machine learning

In this week’s AI Talk, I’m going to do a deep dive into a hot topic in the field: the quiet revolution brewing in one corner of the machine learning (ML) world, an esoteric area dubbed “embeddings.” In the 1950s, a British linguist named John Rupert Firth made a famous statement: “You shall know a word by the company it keeps.” More than half a century later, the ML world gave concrete meaning to that quote!

What exactly are “embeddings”? Embeddings are simply representations, initially of just words. And what is a representation? In the ML world, it is a vector of floating-point numbers. In 2013, Tomas Mikolov published a seminal article on a scheme called “word2vec,” which kick-started the word representation race among data scientists. The word representations were learned by processing a large corpus of text. How? Simply by observing the context in which each word appears: words that keep similar company end up with similar vectors.
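To make Firth’s idea concrete, here is a minimal count-based sketch. It is an illustration under simplifying assumptions, not word2vec itself (word2vec trains a shallow neural network to predict words from their neighbors, or neighbors from a word). Here each word’s “vector” is just a tally of the words seen within a small window around it, and cosine similarity compares two tallies. The toy corpus and the helper names `cooccurrence_vectors` and `cosine` are hypothetical.

```python
import math
from collections import Counter, defaultdict


def cooccurrence_vectors(sentences, window=2):
    """Map each word to a Counter of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            start = max(0, i - window)
            end = min(len(tokens), i + window + 1)
            for j in range(start, end):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse count vectors (Counters)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical toy corpus of clinical-style sentences.
corpus = [
    "the patient took aspirin for pain",
    "the patient took ibuprofen for pain",
    "the nurse charted the vitals",
]
vectors = cooccurrence_vectors(corpus)
print(cosine(vectors["aspirin"], vectors["ibuprofen"]))  # 1.0
print(cosine(vectors["aspirin"], vectors["vitals"]))     # 0.0
```

Because “aspirin” and “ibuprofen” keep exactly the same company in this toy corpus, their count vectors point in the same direction, while “vitals” shares no context words with “aspirin” at all. Learned embeddings such as word2vec compress this kind of signal into short dense vectors.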

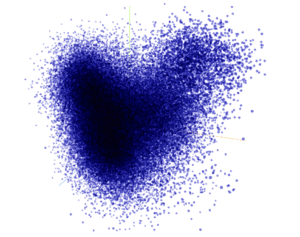

One of the interesting aspects of this representation is that you can solve analogy problems purely through vector manipulation. Take “king : queen :: man : ?”: compute the difference between the “queen” and “king” vectors, add it to the representation of “man,” and you land near “woman.” You can do similar math to determine the capitals of countries. The image in this post is not a heart; it’s a visualization of word embeddings created using TensorBoard (a Google tool), based on a large vocabulary of clinical terms. Every dot corresponds to a word in a multi-dimensional space!
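The analogy trick can be shown with hand-made vectors. In this sketch the three dimensions (roughly “royalty,” “maleness,” “fruitiness”) and all the numbers are invented for illustration; real word2vec vectors have hundreds of learned dimensions, and no single dimension has such a clean meaning. The hypothetical `solve_analogy` helper forms b − a + c and returns the nearest remaining word by cosine similarity, excluding the three input words.

```python
import math

# Hand-made toy embeddings (hypothetical): [royalty, maleness, fruitiness].
EMBEDDINGS = {
    "king":   [1.0, 1.0, 0.0],
    "queen":  [1.0, -1.0, 0.0],
    "man":    [0.0, 1.0, 0.0],
    "woman":  [0.0, -1.0, 0.0],
    "prince": [0.8, 1.0, 0.0],
    "apple":  [0.0, 0.0, 1.0],
}


def cosine(u, v):
    """Cosine similarity between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def solve_analogy(a, b, c, embeddings=EMBEDDINGS):
    """Answer a : b :: c : ? by finding the word nearest to (b - a + c)."""
    target = [vb - va + vc for va, vb, vc in zip(embeddings[a], embeddings[b], embeddings[c])]
    candidates = [w for w in embeddings if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, embeddings[w]))


print(solve_analogy("king", "queen", "man"))  # woman
print(solve_analogy("man", "woman", "king"))  # queen
```

Excluding the input words matters: without it, the nearest vector to the target is often one of the inputs themselves.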

Fast-forward half a dozen years, and the field of embeddings is evolving at breakneck speed. First came a breakthrough neural network architecture called the “Transformer,” which uses a mechanism called “attention” to focus on specific parts of the input. The notion of “attention” was loosely modeled on the human ability to focus on a specific object. This architecture achieved State of the Art (SOTA) results on a variety of translation tasks (English->French, English->German). The Transformer architecture now underpins most of the SOTA results emerging in embeddings research.
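At the heart of the Transformer’s attention is “scaled dot-product attention”: each query is compared with every key, the scores are divided by the square root of the key dimension, a softmax turns them into weights, and the output is the weighted average of the values. The pure-Python sketch below shows only that one step, assuming a single attention head with no learned projection matrices; a real Transformer adds those projections, multiple heads and many stacked layers.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention, softmax(Q K^T / sqrt(d_k)) V, on plain lists.

    Returns (outputs, weights): one output vector and one weight list per query.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy example: the query points in the same direction as the first key,
# so the first value vector receives almost all of the attention.
queries = [[4.0, 0.0]]
keys = [[4.0, 0.0], [0.0, 4.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = scaled_dot_product_attention(queries, keys, values)
print(weights[0])
print(outputs[0])
```

The softmax guarantees the weights for each query sum to one, so each output is always a blend of the value vectors, weighted by how well the query “matches” each key.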

ELMo

The next representation leap for word embeddings came from the Allen Institute for Artificial Intelligence, in the form of ELMo. Why a Muppet character? Well, it is an acronym for Embeddings from Language Models. Language models are tools in the natural language processing (NLP) world: a model is trained in an unsupervised fashion to predict the next word given the words that precede it. ELMo was trained using a bidirectional language model, that is, a model that uses both the left and right context of any given word. How does one evaluate these embeddings? By using the learned representations to perform a series of downstream NLP tasks, an approach known as “transfer learning.” Can we use the learned embeddings to determine whether a product review is positive or negative? Can we use them to extract names of people, places and other entities (a task called Named Entity Recognition, or NER)? Can we use them to answer questions? Evaluating and comparing architectures became so intense in the past year that a new public benchmark, built from publicly available corpora, was developed to evaluate NLP models on a collection of NLP tasks: the General Language Understanding Evaluation (GLUE) benchmark.
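The idea of a language model can be sketched in miniature with counts. The toy below is an assumption for illustration, not ELMo’s method: ELMo uses deep bidirectional LSTM networks, whereas this sketch keeps two count tables, one predicting the next word from the word on its left and one predicting the previous word from the word on its right. That mirrors the “left and right context” idea at the smallest possible scale. The corpus and the function names `train_bigram_models` and `most_likely` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_models(sentences):
    """Count-based forward and backward bigram models.

    forward[w] counts the words seen right after w (left-to-right model);
    backward[w] counts the words seen right before w (right-to-left model).
    """
    forward = defaultdict(Counter)
    backward = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for left, right in zip(tokens, tokens[1:]):
            forward[left][right] += 1
            backward[right][left] += 1
    return forward, backward


def most_likely(model, word):
    """Most frequent neighbor of `word` in the model's direction, or None if unseen."""
    counts = model.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy corpus.
corpus = [
    "the patient has a fever",
    "the patient has a cough",
    "the patient denies pain",
    "a fever was noted",
]
forward, backward = train_bigram_models(corpus)
print(most_likely(forward, "patient"))  # has
print(most_likely(backward, "fever"))   # a
```

Note that training needs nothing but raw sentences: the “labels” are just the neighboring words, which is what makes language modeling unsupervised.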

BERT

With ELMo providing SOTA results in the middle of 2018, last fall saw the release of BERT. Yes, you heard it right: another beloved Muppet character! It’s an acronym for Bidirectional Encoder Representations from Transformers. BERT, an architecture conjured up by a Google team, is also focused on creating embeddings, this time both word and sentence embeddings. Sentence embeddings, vectors representing the meaning of a sentence, can be used in many contexts, from predicting the next sentence to generating and summarizing text. “Training of BERT-LARGE was performed on 16 Cloud TPUs (64 TPU chips total). Each pretraining took 4 days to complete.” A TPU (Tensor Processing Unit) is a special-purpose chip designed by Google and optimized for the matrix computations at the core of deep learning. That’s a massive amount of computing power to create the BERT embeddings. The embeddings are learned from a large corpus of text using unsupervised techniques. The basic tricks? Hide randomly chosen words in a sentence and predict them from the context around them. Given a pair of sentences, predict whether the second one actually follows the first. Both techniques are easy to engineer, as you only need plain text documents (e.g., Wikipedia pages). But the real kicker is that, when the learned embeddings were applied to a collection of other NLP tasks (GLUE), they achieved SOTA results. You can do a real deep dive on BERT by exploring the Google blog or this one.
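The two “basic tricks” can be sketched as data-preparation steps. The masking rule below follows the recipe described in the BERT paper (select about 15% of tokens; replace a selected token with [MASK] 80% of the time, with a random word 10% of the time, and leave it unchanged 10% of the time). The sentence-pair step is simplified: BERT draws its negative sentence from elsewhere in the corpus, while this sketch just picks another sentence from the same list. Tokenization by whitespace, the `[MASK]` string and the helper names `mask_tokens` and `make_nsp_example` are assumptions for illustration.

```python
import random

MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """BERT-style masked-word corruption.

    Each position is selected with probability `mask_prob`; a selected token
    becomes [MASK] 80% of the time, a random vocabulary word 10% of the time,
    and stays unchanged 10% of the time. `labels` holds the original token at
    selected positions (what the model must predict) and None elsewhere.
    """
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # not selected for prediction
        labels[i] = token
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK_TOKEN
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)
        # otherwise keep the original token
    return inputs, labels


def make_nsp_example(sentences, index, rng):
    """Sentence-pair example (first, second, is_next).

    Half the time `second` is the true next sentence; otherwise (and always,
    if `index` is the last sentence) it is a random other sentence.
    """
    first = sentences[index]
    if index + 1 < len(sentences) and rng.random() < 0.5:
        return first, sentences[index + 1], True
    candidates = [s for j, s in enumerate(sentences) if j not in (index, index + 1)]
    return first, rng.choice(candidates), False


rng = random.Random(42)
tokens = "the patient was given aspirin for chest pain".split()
print(mask_tokens(tokens, vocab=tokens, rng=rng))
doc = ["The patient arrived.", "Vitals were stable.", "Aspirin was given."]
print(make_nsp_example(doc, 0, rng))
```

Both functions only rearrange plain text, which is why the approach scales to huge unlabeled corpora: the training targets come for free.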

The competition is just heating up, and a whole variety of Transformer-based work has already been published that improves the SOTA in various ways. I wonder if Cookie Monster is next? Or Kermit the Frog?

36 billion dollar boondoggle

This week, Fortune published a scathing indictment of the state of EHRs. For those of us in the trenches, nothing in the article is really new! Though the EHR eliminated some types of errors, it introduced many more. Documentation bloat and upcoding have also been observed. EHR usability is a well-documented problem, and the attendant physician burnout rate is well known. Information blocking is commonplace. This quote from David Blumenthal, former head of the ONC, is telling: “like asking Amazon to share their data with Walmart.” I remember hospital execs referring to the patients in their vicinity as their “catchment area!” They had a proprietary hold on patients. Not everything is doom and gloom, though. The rise of speech recognition interfaces and scribing solutions is helping, but one has to wonder if we missed an opportunity to overhaul our digital universe in health care. The U.S. government spent $36 billion on this front, which unfortunately looks more and more like a boondoggle for the EHR vendors.

Acknowledgements:

The suggestion for an edublog that is relevant for our organization came from my boss, Detlef Koll. From time to time, I will do a deep dive on a topic to provide a layman’s overview. The embeddings figure is from my colleague and friend, Russell Klopfer. Multiple colleagues pointed me to the Fortune article, including Philippe Truche and Brian Ellenberger.

I am always looking for feedback, and if you would like me to cover a story, please let me know. “See something, say something”! Leave me a comment below or ask a question on my blogger profile page.

V. “Juggy” Jagannathan, PhD, is Vice President of Research for M*Modal, with four decades of experience in AI and Computer Science research.