Inside Angle

From 3M Health Information Systems

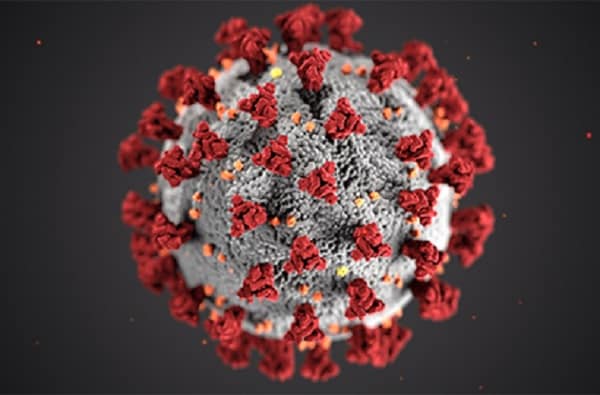

AI Talk: Forecasting and estimating

I want to highlight two different AI applications this week: one that forecasts COVID-19 cases and another that helps estimate all the calories you eat while stuck at home staring at the stuff in your pantry and fridge.

New COVID-19 forecasting model

Google's Cloud AI division has teamed up with the Harvard Global Health Institute to release a new COVID-19 forecasting model. The model is intended as a resource for policy makers in every U.S. county to make real-time decisions on how to handle the pandemic. You can check out their forecast for your county here. There are lots of models out there, so the question is: what is unique about this one?

You can learn the details of their approach in this fairly long white paper. They have adapted a century-old approach, the SEIR (susceptible, exposed, infected, recovered) model, adding further facets (they call them compartments) such as undocumented infected, undocumented recovered, hospitalized, ICU use and ventilator use. It is a fairly detailed model, and its data comes from a range of public datasets covering every corner of the U.S. These include Johns Hopkins COVID-19 data, mobility data, policy information for every locality, demographic data broken down to the county level, air quality data from the EPA, econometric data (poverty and affluence levels) from the U.S. Census, hospital resource availability data and counts of confirmed cases and deaths.
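For readers who have not seen SEIR before, here is a minimal sketch of the classic four-compartment model, stepped forward one day at a time with simple Euler integration. It is not Google's extended model: the parameter values (transmission rate `beta`, incubation rate `sigma`, recovery rate `gamma`) and the county size are illustrative assumptions, not fitted values.

```python
from dataclasses import dataclass


@dataclass
class SEIRState:
    s: float  # susceptible
    e: float  # exposed (infected but not yet infectious)
    i: float  # infectious
    r: float  # recovered / removed


def seir_step(state: SEIRState, beta: float, sigma: float, gamma: float,
              dt: float = 1.0) -> SEIRState:
    """Advance the classic SEIR equations by one forward-Euler step.

    beta  : transmission rate per day
    sigma : 1 / incubation period (E -> I)
    gamma : 1 / infectious period (I -> R)
    """
    n = state.s + state.e + state.i + state.r
    new_exposed = beta * state.s * state.i / n * dt  # S -> E
    new_infectious = sigma * state.e * dt            # E -> I
    new_recovered = gamma * state.i * dt             # I -> R
    return SEIRState(
        s=state.s - new_exposed,
        e=state.e + new_exposed - new_infectious,
        i=state.i + new_infectious - new_recovered,
        r=state.r + new_recovered,
    )


def simulate(initial: SEIRState, days: int, beta: float, sigma: float,
             gamma: float) -> list[SEIRState]:
    """Return the daily trajectory, including the initial state."""
    states = [initial]
    for _ in range(days):
        states.append(seir_step(states[-1], beta, sigma, gamma))
    return states


if __name__ == "__main__":
    # Hypothetical county of 100,000 people with 10 initial infections.
    history = simulate(SEIRState(s=99_990, e=0, i=10, r=0), days=120,
                       beta=0.3, sigma=1 / 5.2, gamma=1 / 10)
    peak_day = max(range(len(history)), key=lambda d: history[d].i)
    print(f"Peak infections on day {peak_day}: {history[peak_day].i:,.0f}")
```

Each compartment only moves people to the next one, so the total population stays constant. Google's version adds compartments such as undocumented infected, hospitalized and ICU, and fits its rates to data rather than fixing them by hand.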

Breaking the model into compartments allows them to explain the predictions it generates, which is a big plus for policy makers. Each of their variables (they call them covariates) is a time series, and the rates in the model change as those covariates change. For example, the rate of infection in a particular county varies over time based on policy decisions such as mandatory mask wearing. Given the level of detail their model incorporates, they are able to accurately predict rates of infection in the poor and minority communities that have been hardest hit by this pandemic. Perhaps school districts will use this tool to determine whether it is safe to reopen.
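The idea that a county's infection rate moves with its covariates can be sketched in a few lines. This is only an illustration of the mechanism: the log-linear form, the covariate names `mask_mandate` and `mobility`, and the weights below are all hypothetical, not the white paper's actual formulation, which learns these relationships from data.

```python
import math


def transmission_rate(base_beta: float, covariates: dict[str, float],
                      weights: dict[str, float]) -> float:
    """Scale a baseline transmission rate by a log-linear function of covariates.

    Hypothetical form: beta(t) = base_beta * exp(sum_k w_k * x_k(t)).
    A negative weight means the covariate lowers transmission.
    """
    score = sum(weights[name] * value for name, value in covariates.items())
    return base_beta * math.exp(score)


# Hypothetical daily covariates for one county: a mask mandate that starts on
# day 3 and a mobility index (1.0 = pre-pandemic baseline).
daily_covariates = [
    {"mask_mandate": 0.0, "mobility": 1.0},
    {"mask_mandate": 0.0, "mobility": 0.9},
    {"mask_mandate": 0.0, "mobility": 0.8},
    {"mask_mandate": 1.0, "mobility": 0.8},
    {"mask_mandate": 1.0, "mobility": 0.7},
]
# Made-up weights; in a real model these would be estimated from data.
weights = {"mask_mandate": -0.4, "mobility": 0.5}

betas = [transmission_rate(0.3, x, weights) for x in daily_covariates]
for day, beta in enumerate(betas):
    print(f"day {day}: beta = {beta:.3f}")
```

Because every covariate is a time series, the resulting transmission rate is one too, and feeding it into an SEIR-style model day by day lets a policy change (here, the mask mandate on day 3) show up directly in the forecast.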

Deciphering your plate

This week, an article in AI in Healthcare caught my attention. It’s about an AI-powered app called goFood. Researchers at the University of Bern (along with the University of Maryland) have developed a deep learning solution that figures out what is on your plate. You simply take two photos with a smartphone, or a short video clip, and voilà: you get a listing of what is on your plate and its nutritional content. I always thought it was just a matter of time before we’d have such an app. Like a whole lot of folks out there, I have tried various calorie-tracking apps in a vain attempt to lose a few extra pounds. With current apps, you still have to tell the app what you are eating and the portion size, down to the ounce. Not with this new app.

Using a convolutional neural network, the researchers at Bern segment the image into its constituent food items, estimate the volume of each item and then determine its nutritional content and calories. You can read all about their work here (the app is not yet available for public use). The results are as good as or better than those of trained dietitians, and the system does a pretty good job on pictures of fast food.

Of course, the algorithm relies heavily on recognizing each food item. They use a three-level hierarchy: the first level holds broad categories such as bread, pasta and dairy, and the second and third levels refine those categories. The app, however, has been developed and tested primarily on European diets. The authors note it is virtually impossible to capture the rich diversity of food across all countries and cultures, but I was heartened to see rice, biryani and samosa in their paper, so perhaps Indian food is covered! That said, in one instance they seem to have recognized a chicken wing as a samosa, which doesn’t inspire confidence. Free advice to the designers of this system: incorporate the dietary habits of the user whose plate they are analyzing. I am sure the system will get better with time.
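To make the last steps of that pipeline concrete, here is a minimal sketch of what happens after the neural network has done its work: look up a recognized item in a three-level hierarchy, then turn its estimated volume into calories (volume × density → grams, grams × energy density → kcal). It assumes recognition and volume estimation are already done; the taxonomy entries, the `SegmentedItem` type and every nutrition number are hypothetical placeholders, not goFood's data or code.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical three-level hierarchy: level 1 -> level 2 -> level 3 items.
# Category names are illustrative, not goFood's actual taxonomy.
FOOD_HIERARCHY: dict[str, dict[str, list[str]]] = {
    "bread": {"white bread": ["baguette", "toast"], "whole grain": ["rye bread"]},
    "pasta": {"long pasta": ["spaghetti"], "short pasta": ["penne"]},
    "rice": {"plain rice": ["white rice"], "rice dishes": ["biryani"]},
}

# Placeholder values per fine-grained item: (density in g/ml, energy in kcal/g).
# These numbers are made up for the example; they are not nutritional data.
NUTRITION: dict[str, tuple[float, float]] = {
    "spaghetti": (0.6, 1.6),
    "biryani": (0.7, 1.8),
    "toast": (0.3, 2.6),
}


def hierarchy_path(item: str) -> Optional[tuple[str, str, str]]:
    """Return (level 1, level 2, level 3) for a fine-grained label, or None."""
    for level1, subcategories in FOOD_HIERARCHY.items():
        for level2, items in subcategories.items():
            if item in items:
                return (level1, level2, item)
    return None


@dataclass
class SegmentedItem:
    label: str        # assumed output of the recognition step
    volume_ml: float  # assumed output of the volume-estimation step


def estimate_calories(items: list[SegmentedItem]) -> float:
    """Convert each item's volume to grams, grams to kcal, and sum over the plate."""
    total_kcal = 0.0
    for item in items:
        density_g_per_ml, kcal_per_g = NUTRITION[item.label]
        grams = item.volume_ml * density_g_per_ml
        total_kcal += grams * kcal_per_g
    return total_kcal


plate = [SegmentedItem("spaghetti", 250.0), SegmentedItem("toast", 100.0)]
print(hierarchy_path("biryani"))  # ('rice', 'rice dishes', 'biryani')
print(f"{estimate_calories(plate):.0f} kcal")
```

Because the calorie figure is keyed on the recognized label, a misrecognition (say, a chicken wing labeled as a samosa) flows straight into the wrong nutrition lookup, which is why recognition accuracy matters so much.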

I am always looking for feedback, and if you would like me to cover a story, please let me know. “See something, say something!” Leave me a comment below or ask a question on my blogger profile page.

V. “Juggy” Jagannathan, PhD, is Director of Research for 3M M*Modal and is an AI Evangelist with four decades of experience in AI and Computer Science research.